Real Professional Software Engineering in the AI Era

In Part 1, we established that “vibe coding”—describing what you want and shipping what AI generates—creates productivity illusions that collapse spectacularly under production load. Part 2 explored the feedback loop that AI can’t replicate.

Now we confront the practical question: What skills define real professionals when typing code becomes trivial?

AI code assistants accelerate the mechanical part extraordinarily well. GitHub Copilot autocompletes functions. ChatGPT generates entire APIs from prompts. The typing is handled.

Yet you remain indispensable. Not in spite of AI’s code generation capabilities, but because of them.

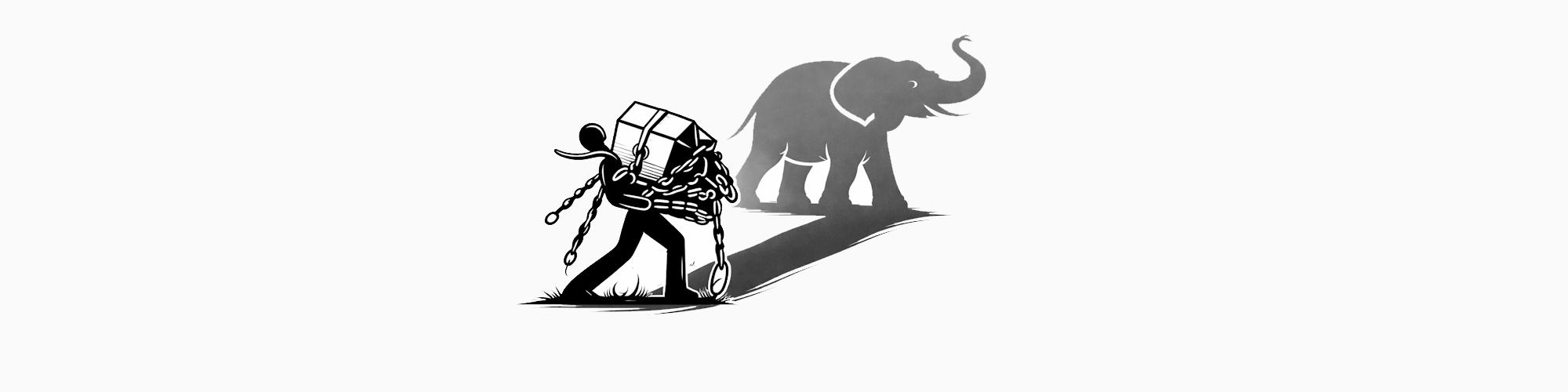

Why? When code generation becomes commoditized, the differentiator isn’t typing speed. It’s accumulated experience. Watching systems fail in production. Understanding why they failed. Applying that hard-won knowledge to prevent the next failure.

Here’s the uncomfortable truth: Organizations that confuse “lines of code generated” with “productivity” discover the difference when production incidents spike—and the bill arrives.

Why Prompt Engineering Isn’t Architecture

AI code generation creates a seductive trap.

You think: If I can describe what I want in natural language and get working code, isn’t that sufficient? Why spend time understanding implementation details when AI handles them?

Here’s why that’s wrong: Prompts describe intent. Not constraints.

And software engineering? It’s fundamentally about managing constraints. Performance budgets. Memory limits. Concurrency safety. Error handling. Maintainability. Operational cost. Security boundaries.

Consider asking an AI to “implement caching for customer data.” You’ll get code that caches. But you won’t get answers to:

- What’s the memory budget? When does caching become more expensive than repeated database calls?

- How do you handle cache invalidation across multiple application instances?

- What’s the consistency model? Can stale data cause correctness issues downstream?

- How do you monitor cache hit rates to verify it’s actually improving performance?

- What happens during cache warming? Do users experience degraded performance on cold starts?

AI generates code that addresses the prompt. Professionals understand these questions emerge from production experience—from watching systems fail, from debugging race conditions at 3 AM, from analyzing cost reports that show caching is more expensive than the problem it solved, from responding to incidents where stale cache data caused customer-visible bugs.
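To make a few of those questions concrete, here is a minimal sketch of a cache that at least states its memory budget, bounds staleness, and exposes a hit rate. The class name `BoundedTTLCache`, its parameters, and the `loader` callback are hypothetical names chosen for illustration, not taken from any particular library. It is a single-process sketch and is not thread-safe, so the question of invalidation across multiple application instances remains open.

```python
import time
from collections import OrderedDict
from typing import Any, Callable, Hashable


class BoundedTTLCache:
    """Hypothetical LRU cache with a size cap, a TTL, and hit/miss counters."""

    def __init__(self, max_entries: int, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._max_entries = max_entries  # memory budget, expressed as an entry count
        self._ttl = ttl_seconds          # upper bound on how stale a value may be
        self._clock = clock              # injectable so tests can control time
        self._data = OrderedDict()       # key -> (stored_at, value), oldest first
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable, loader: Callable[[Hashable], Any]) -> Any:
        now = self._clock()
        entry = self._data.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            self._data.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return entry[1]
        # Missing or expired: pay for the real lookup and record the miss.
        self.misses += 1
        value = loader(key)
        self._data[key] = (now, value)
        self._data.move_to_end(key)
        while len(self._data) > self._max_entries:
            self._data.popitem(last=False)  # evict the least recently used entry
        return value

    def invalidate(self, key: Hashable) -> None:
        """Drop one key in this process only; other instances are unaffected."""
        self._data.pop(key, None)

    @property
    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

Watching `hit_rate` over time is one way to check whether the cache earns its memory. A rate that stays low suggests the cache costs more than the database calls it was meant to avoid.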

Prompt engineering optimizes for generating code quickly. Software architecture optimizes for systems that survive production reality. These are orthogonal skills.

I’ve seen teams adopt AI-heavy workflows where junior developers generate features rapidly using prompts, and senior developers spend weeks later refactoring the accumulated technical debt. The AI-generated code worked in isolation. It failed as a system because no one understood how the pieces interacted, what assumptions each component made, or where performance would degrade under load.

The skill that AI can’t replace: recognizing which questions to ask before writing code, not generating syntax after questions are answered. That recognition comes from the feedback loop—you write code, watch it fail, understand why it failed, and internalize the lesson.

Prompt-driven development skips this loop entirely, outsourcing both the implementation and the learning.

Real professionals don’t reject AI tools. They use them to accelerate the mechanical parts while maintaining ownership of the architectural decisions, performance analysis, and failure mode understanding that prompts can’t capture.

Technical Debt: Where Abstract Design Becomes Concrete Burden

Technical debt is the deferred consequence of abstract thinking, surfacing as maintenance burden. Design decisions that felt reasonable in isolation accumulate into complexity that resists change, harbors bugs, and drains productivity.

Every architecture discussion includes statements like “we’ll refactor later” or “this is temporary” or “once we prove the concept, we’ll clean it up.” These are thought patterns that treat code as temporary scaffolding rather than operational reality. Code doesn’t stay temporary—it becomes production reality that teams maintain for years.

I’ve inherited codebases where “temporary” solutions from 2015 still run in production, calcified by dependencies and surrounded by defensive code that works around their limitations. The abstract thinking that justified shortcuts—“we’re moving fast,” “we’ll fix it in v2”—never accounted for the operational reality: v2 got deprioritized, teams changed, knowledge evaporated, and the technical debt persisted.

Microsoft’s guidance on technical debt management emphasizes measuring and prioritizing by impact, meaning actual operational burden rather than abstract severity:

“Prioritize technical debt items based on their effects on workload functionality. Focus on addressing the issues that have the most significant effect on the performance, maintainability, and scalability of the workload.”

This requires executable code that can be measured, profiled, and analyzed. Abstract architectural concerns translate into concrete technical debt only when code exists to evaluate. You can’t measure maintainability, performance impact, or operational cost without code that runs in production-like conditions.

AI-accelerated development amplifies this pattern.

When junior developers generate features using prompts, the code works immediately but accumulates technical debt invisibly. The AI optimized for “works now,” not “maintainable long-term.”

Six months later, when requirements change? The bill comes due. What took 2 days to generate takes 2 weeks to refactor. Why? Because no one understands the generated foundations.

Real cost: senior developers spending 40+ hours untangling AI-generated code instead of building new features. Assuming a loaded cost of €100-200 per hour, that’s €4,000-8,000 in lost productivity per feature.

Real Professionals in the AI Era: Mastering the Feedback Loop

David’s comment about real professionals not being replaced wasn’t wishful thinking or gatekeeping. It was recognition that professional software engineering has never been about typing code—and in an era where typing is automated, that distinction becomes brutally clear.

The skills that define professionals in 2026 and beyond:

Understanding Execution Characteristics

When AI generates code, professionals can read it and immediately recognize:

- Allocation patterns that will cause garbage collection pressure

- Database access patterns that create N+1 problems

- Synchronization primitives that risk deadlocks

- API contracts that will break under versioning

- Abstractions that trade clarity for cleverness

This isn’t about memorizing syntax. It’s about pattern recognition from seeing thousands of implementations and their production consequences. AI can generate the code. Professionals can predict where it fails before deployment.
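The N+1 pattern from the list above is easy to show in miniature. The sketch below uses an in-memory SQLite database with hypothetical `customers` and `orders` tables. The `CountingDB` wrapper is an illustrative helper that exists only to count read queries. Both functions return the same totals, but the first issues one query per customer.

```python
import sqlite3


class CountingDB:
    """Hypothetical wrapper that counts how many read queries are issued."""

    def __init__(self) -> None:
        self.conn = sqlite3.connect(":memory:")
        self.queries = 0

    def query(self, sql: str, params: tuple = ()) -> list:
        self.queries += 1
        return self.conn.execute(sql, params).fetchall()


def seed(db: CountingDB, customers: int = 50, orders_each: int = 3) -> None:
    """Create illustrative tables and fill them with uniform test data."""
    db.conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    db.conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
    )
    for cid in range(1, customers + 1):
        db.conn.execute("INSERT INTO customers VALUES (?, ?)", (cid, f"customer-{cid}"))
        for _ in range(orders_each):
            db.conn.execute(
                "INSERT INTO orders (customer_id, total) VALUES (?, ?)", (cid, 10.0)
            )


def totals_n_plus_one(db: CountingDB) -> dict:
    """Looks reasonable, but runs 1 query for customers plus 1 per customer."""
    result = {}
    for cid, name in db.query("SELECT id, name FROM customers"):
        rows = db.query("SELECT total FROM orders WHERE customer_id = ?", (cid,))
        result[name] = sum(total for (total,) in rows)
    return result


def totals_single_query(db: CountingDB) -> dict:
    """Same result from one aggregated query, regardless of customer count."""
    rows = db.query(
        "SELECT c.name, COALESCE(SUM(o.total), 0) "
        "FROM customers c LEFT JOIN orders o ON o.customer_id = c.id "
        "GROUP BY c.id, c.name"
    )
    return dict(rows)
```

With 50 customers the first version issues 51 queries and the second issues 1. Against a local test database both feel instant, and the difference only becomes visible once network latency and real data volumes are involved.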

Asking Questions AI Can’t Formulate

AI optimizes for the prompt it receives. Professionals know which questions to ask before prompting:

- What’s the failure mode if this service is unavailable?

- How does this perform when the dataset grows 100x?

- What happens during partial failures across service boundaries?

- How do we roll this back if production deployment reveals problems?

- What operational metrics signal that this implementation is degrading?

These questions emerge from production scars, not documentation. They represent thinking that can’t be prompted because the prompt itself requires experience to formulate.
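As one illustration of the first question, here is a minimal sketch of a call that decides in advance what happens when a dependency is unavailable: retry a bounded number of times with backoff, then fall back and say so. The names `call_with_fallback` and `Result`, and the retry parameters, are assumptions made for this example. Whether a fallback is acceptable at all depends on the system.

```python
import time
from dataclasses import dataclass
from typing import Callable, Generic, TypeVar

T = TypeVar("T")


@dataclass
class Result(Generic[T]):
    """Hypothetical result type that keeps degradation visible to the caller."""
    value: T
    degraded: bool   # True when the fallback was used instead of the live service
    attempts: int    # how many times the primary call was tried


def call_with_fallback(
    primary: Callable[[], T],
    fallback: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> Result[T]:
    """Try `primary` up to `attempts` times, then use `fallback`."""
    for attempt in range(1, attempts + 1):
        try:
            return Result(primary(), degraded=False, attempts=attempt)
        except (ConnectionError, TimeoutError):
            # Only transient, connectivity-style failures are retried here;
            # anything else propagates so real bugs are not masked.
            if attempt < attempts:
                sleep(base_delay * 2 ** (attempt - 1))  # exponential backoff
    return Result(fallback(), degraded=True, attempts=attempts)
```

Returning the `degraded` flag rather than hiding the fallback lets callers and dashboards tell live answers from stale ones, which also speaks to the question about operational metrics.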

Recognizing When AI Solutions Are Wrong

AI generates plausible code. Professionals recognize when plausible diverges from correct:

- The generated caching looks reasonable but introduces race conditions

- The suggested refactoring breaks semantic guarantees the original code maintained

- The performance optimization trades correctness for speed

- The error handling silences failures that should propagate

- The abstraction solves the described problem but makes the actual problem harder

This skill—recognizing subtle wrongness—requires understanding not just what code does, but what it should do in context. AI has no context beyond the prompt. Professionals carry context from the entire system, the organization’s constraints, and production failure history.
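The error-handling case is a small example of plausible versus correct. In the sketch below, both functions parse an amount from a JSON payload. The function names and the `InvalidPayment` exception are hypothetical. The first version never crashes, which looks robust, but it turns a misspelled field into a silent zero.

```python
import json


def parse_amount_plausible(payload: str) -> float:
    """Looks defensive, but hides every failure behind a default value."""
    try:
        return float(json.loads(payload)["amount"])
    except Exception:
        return 0.0  # a typo in the field name now silently means "charge nothing"


class InvalidPayment(ValueError):
    """Hypothetical domain error raised when a payload cannot be trusted."""


def parse_amount_strict(payload: str) -> float:
    """Catches only the failures it understands and reports them explicitly."""
    try:
        data = json.loads(payload)
    except json.JSONDecodeError as exc:
        raise InvalidPayment(f"payload is not valid JSON: {exc}") from exc
    if not isinstance(data, dict) or "amount" not in data:
        raise InvalidPayment("payload has no 'amount' field")
    try:
        return float(data["amount"])
    except (TypeError, ValueError) as exc:
        raise InvalidPayment(f"amount is not numeric: {data['amount']!r}") from exc
```

Given the payload `{"amuont": 12.5}`, the first function returns `0.0` and the second raises `InvalidPayment`. Which behavior is right depends on context the prompt never contained, but for anything involving money, the failure that propagates is usually the safer one.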

Debugging When AI-Generated Code Fails

AI can’t debug its own output effectively because it has no execution model. It can suggest changes based on error messages, but it can’t reason about:

- Why the garbage collector is thrashing

- Where the memory leak originates across object graphs

- Why this specific race condition appears under production load but not in testing

- How this performance degradation emerged from the interaction of six separate components

Professionals debug by understanding execution: what the CPU is doing, how memory is managed, where I/O blocking occurs, how the runtime schedules work. This understanding comes from the feedback loop—watching code execute, measuring behavior, correlating symptoms with causes.

Maintaining Code AI Generated Yesterday

The code AI generates today becomes the maintenance burden of tomorrow. Professionals understand that maintainability isn’t syntax elegance—it’s whether future developers (including AI-assisted ones) can understand intent, modify behavior safely, and reason about consequences.

AI-generated code often optimizes for immediate functionality over long-term maintainability because prompts rarely include “make this easy to modify in six months when requirements change.” Professionals review AI output through the lens of future maintenance: Does this abstraction clarify or obscure? Will this pattern scale when similar features are added? Can someone unfamiliar with this code understand its failure modes?

The Economic Reality of AI-Accelerated Development

AI tools make junior developers dramatically more productive at generating code. Sounds like pure upside, right?

Until you measure the total lifecycle cost:

- Features ship faster but accumulate technical debt faster

- Code coverage is high but defect rates increase by 25-40%

- Development velocity looks impressive until production incidents spike

- Refactoring becomes more expensive because no one understands the AI-generated foundations

Organizations fall into two types:

Type 1 measures productivity by lines of code generated or features shipped per sprint. They see AI as a massive win.

Type 2 measures productivity by system reliability, operational cost, and maintenance burden. They see a more complex picture—and higher total cost.

Your value proposition shifts: From “can write code” to “can ensure AI-generated code survives production.”

That’s not a diminished role. It’s a more critical one.

The ability to generate code becomes commoditized. The ability to evaluate, refine, and maintain that code? That becomes your differentiator.

Why the Feedback Loop Can’t Be Automated

AI can participate in parts of the feedback loop:

- It can suggest implementations based on requirements

- It can generate tests based on code

- It can propose refactorings based on patterns

But it can’t close the loop because closing the loop requires:

- Execution in realistic conditions: Production load, real data volumes, actual failure scenarios

- Measurement of consequences: Performance under stress, cost implications, operational burden

- Interpretation of results: Understanding why this metric degraded, why this pattern emerged, why this assumption failed

- Refinement of thinking: Updating mental models about what works, what fails, and why

- Application to future decisions: Recognizing similar patterns in new contexts and avoiding repeated mistakes

AI can help with the first two requirements. The last three require human judgment informed by accumulated experience. This is the feedback loop David referenced: the mechanism that sharpens thinking through repeated collision with executable reality.

Real professionals master this loop. They write code (or review AI-generated code), watch it execute, measure its behavior, understand its failure modes, and refine their thinking. Each iteration strengthens their ability to recognize what will work before writing it, what will fail before deploying it, and what will cost more than it’s worth before building it.

This skill can’t be replaced because it’s not about having the right answer immediately—it’s about knowing how to find the right answer through disciplined iteration between abstract thinking and concrete execution.

Conclusion: Code Demands Honest Thinking

Yes, thinking is hard. Reasoning through constraints, evaluating trade-offs, understanding system dynamics—these require deep intellectual work. I’ve never disputed this.

But here’s what the “thinking is everything” narrative misses: code is not just the mechanical output of that thinking. Code is the form that forces thinking into honesty. It’s where vague reasoning gets brutally exposed, deferred decisions become unavoidable, and abstract consequences materialize as operational reality that costs real money and wakes you up at 3 AM.

Treating code as “just another language” undersells what programming actually does: it transforms thought from abstract possibility into executable certainty. It makes performance measurable, correctness testable, and complexity visible. It forces precision where thought allows comfortable ambiguity.

Software engineering isn’t thinking OR programming. It’s thinking made rigorous through programming. It’s the tight feedback loop where abstract reasoning and executable verification sharpen each other iteratively.

One without the other doesn’t scale:

- Thinking without executable form stays untested and often wrong

- Code without thoughtful design becomes unmaintainable complexity

- AI-generated code without understanding becomes technical debt that compounds with every sprint

- Prompt engineering without production experience becomes a liability dressed as productivity

Engineering quality emerges from the discipline of moving between abstract reasoning and concrete implementation—repeatedly, rigorously, honestly.

That’s what makes software engineering difficult. Not the typing. Not even just the thinking. But the intellectual discipline of forcing thought into executable form that survives contact with production reality.

AI can type code faster than you. It can suggest implementations, generate tests, propose refactorings. What it can’t do is learn from watching systems fail in production, understand why they failed, and apply that hard-won knowledge to prevent the next failure.

And that discipline, the feedback loop David referenced, cannot be replaced.

Series Summary

Part 1: Why Real Professionals Will Never Be Replaced by AI

Established that AI-generated code without understanding creates productivity illusions. Vibe coding collapses once code generation is trivial and what matters is understanding execution, failure modes, and operational cost.

Part 2: The Feedback Loop That AI Can’t Replace

Examined the mechanisms that transform abstract thinking into operational understanding: compilers validate logic, tests expose behavioral gaps, profilers measure performance reality, production reveals deferred decisions.

Part 3: Real Professional Software Engineering in the AI Era (this article)

Explored the irreplaceable professional skillset: recognizing execution characteristics, asking questions AI can’t formulate, debugging failures AI can’t reason about, maintaining code AI generated yesterday, and understanding the economic reality where “AI productivity” often means faster technical debt accumulation.

The throughline: real professionals will never be replaced because they’ve mastered the feedback loop, the iterative discipline of writing code, watching it fail, understanding why, and refining their thinking. AI participates in parts of this loop but can’t close it. That’s where professionals remain indispensable.
