Why Real Professionals Will Never Be Replaced by AI

A LinkedIn post by David Guida sparked a discussion that cuts to the bone: Is software engineering about thinking or typing?

David argued forcefully that “software engineering is NOT about writing code”—that code is merely mechanical output, the easy part, just another language. The hard part, he wrote, is thinking: “Programming is a byproduct of the thinking process. And that one, my friends, is the hard part.”

I responded with a point that needed more space than a LinkedIn comment allows:

“Strong point, but it slightly overcorrects. Yes, typing code is the easy, mechanical part. The hard part is reasoning, trade-offs, and understanding constraints. Agreed. But dismissing code as ‘just another language’ undersells its impact. Code is not only expression, it is execution, cost, failure modes, and long-term operational risk. Thinking without being forced into precise, executable form often stays vague. Writing code is where weak thinking gets exposed. Programming is a byproduct of thinking, but it is also the feedback loop that sharpens that thinking. One without the other does not scale.”

David’s response captured what I’m exploring here: “I totally agree! I must have oversimplified my thoughts. Your closing note on the feedback loop captures the reason why real professionals will never be replaced.”

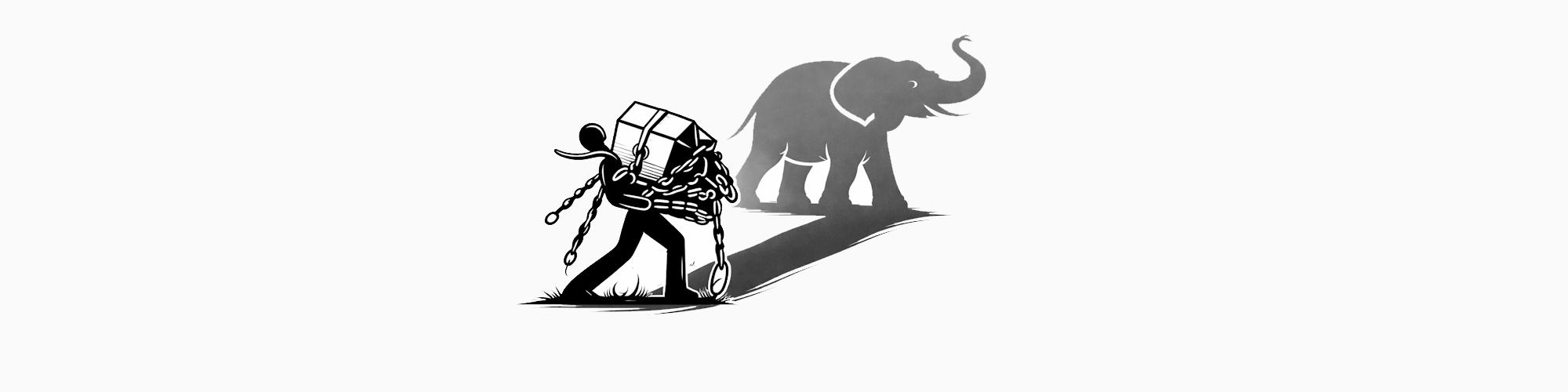

That last phrase wasn’t casual. It points at the elephant in the room: AI code assistants are everywhere. GitHub Copilot. ChatGPT generating entire applications from prompts. The emerging “vibe coding” trend, where developers describe the vibe of what they want and let AI handle the dirty work.

The timing matters. We’re in an era where typing code has never been easier. AI generates syntactically correct implementations faster than any human can type.

Yet here’s what David and I both realized: This makes the feedback loop between thinking and code more critical, not less.

Ask yourself: When code generation becomes trivial, what separates you from a prompt engineer who thinks they’re building software?

The answer: Understanding what that code actually does. What it costs. Where it fails. Why it breaks under load.

This article expands on that feedback loop—the relationship between thinking and code that AI can’t replicate. It explores why AI-generated code without deep understanding creates an illusion of productivity that collapses catastrophically under production load.

Where Vague Thinking Hides

Walk through any architecture review where diagrams look perfect, responsibilities seem clear, and everyone nods in agreement. Then watch what happens when someone starts writing the actual implementation. Suddenly, the clean boundaries blur. The “simple” abstraction requires five parameters. The proposed interface doesn’t fit half the use cases. The design that felt obvious in discussion becomes ambiguous when translated to executable code.

This isn’t implementation failing design. This is design revealing itself to be incomplete.

I’ve sat through countless discussions where proposed solutions felt reasonable until we asked: “Show me the code.” Not production code—just a sketch. Suddenly, implicit assumptions surface. Missing responsibilities become visible. Performance implications emerge. The architecture that seemed solid in abstract terms crumbles when forced into compilable form.

Code demands precision that thought alone doesn’t require. When you think through a problem, your mind fills gaps unconsciously, papers over inconsistencies, and substitutes intuition for rigor. When you write code, the compiler—and eventually production—refuses to cooperate with vague intent.

Consider nullable reference types, introduced in C# 8. With the feature disabled, nothing stops you from mentally hand-waving nullability concerns:

```csharp
// Vague thinking: "customer will always have a name"
public class Customer
{
    public string Name { get; set; }
}
```

Enable nullable reference types, and the compiler forces you to confront reality:

```csharp
#nullable enable

public class Customer
{
    public string Name { get; set; } // Warning CS8618: Non-nullable property 'Name' must contain
                                     // a non-null value when exiting constructor
}
```

This isn’t pedantry. This is thinking being forced into honest, executable form. Either you guarantee initialization, accept nullability explicitly, or redesign the constructor contract:

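```csharp
using System;

public class Customer
{
    public Customer(string name)
    {
        // Reject null at the boundary so Name is guaranteed non-null afterwards
        Name = name ?? throw new ArgumentNullException(nameof(name));
    }

    public string Name { get; }
}
```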

The act of writing code exposed a decision that pure thought glossed over. Was Name required or optional? The compiler didn’t care about your mental model—it demanded an explicit answer.
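The constructor above is one answer. The other two options, guaranteeing initialization at every construction site and accepting nullability explicitly, can be sketched as follows, assuming C# 11 or later for the `required` modifier. The class names `RequiredNameCustomer` and `OptionalNameCustomer` are hypothetical, chosen only to keep the two answers side by side.

```csharp
#nullable enable

// Answer 1: Name is required. 'required' (C# 11) makes every object
// initializer set Name, so the compiler no longer reports CS8618.
public class RequiredNameCustomer
{
    public required string Name { get; init; }
}

// Answer 2: Name is genuinely optional. Declaring it as string? moves
// the null decision to every caller that reads it.
public class OptionalNameCustomer
{
    public string? Name { get; set; }

    // Callers must decide what a missing name means; here, a fallback label.
    public string DisplayName => Name ?? "(no name)";
}
```

Both declarations compile cleanly; what changes is who pays for the decision. With `required`, every construction site must supply a name. With `string?`, every reader must handle its absence.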

This happens at every level: API contracts, concurrency assumptions, resource ownership, error propagation. Abstract thinking lets you defer these decisions indefinitely. Code forces resolution.
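Resource ownership is a good example of a decision code will not let you defer. In this minimal sketch, a hypothetical helper `StreamHelpers.ReadFirstLine` reads from a stream the caller owns. Because `StreamReader` closes its underlying stream when disposed unless told otherwise, the `leaveOpen: true` argument is where the ownership decision gets written down.

```csharp
#nullable enable
using System.IO;
using System.Text;

public static class StreamHelpers
{
    // Hypothetical helper: reads the first line of a stream the caller owns.
    public static string? ReadFirstLine(Stream stream)
    {
        // StreamReader.Dispose closes the underlying stream unless leaveOpen is true.
        // Passing leaveOpen: true records that this method only borrows the stream.
        using var reader = new StreamReader(
            stream,
            Encoding.UTF8,
            detectEncodingFromByteOrderMarks: true,
            bufferSize: 1024,
            leaveOpen: true);

        // Caveat: the reader buffers ahead, so the stream's position afterwards
        // may be past the end of the first line.
        return reader.ReadLine();
    }
}
```

Even this small method surfaces a second decision that prose would skip: after the call, the caller's stream is still open, but its position is not necessarily where the caller expects.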

The Vibe Coding Illusion: When AI Generates Code Without Understanding

AI code assistants accelerate the mechanical part—the typing—extraordinarily well. Describe a function in natural language, and GitHub Copilot suggests an implementation within seconds. Ask ChatGPT to build a REST API, and it generates hundreds of lines of code that compile and often run.

This feels like magic until you ask the critical question: does the generated code do what you actually need, or only what you described?

I’ve reviewed pull requests where developers used AI to generate complete features. The code compiled. Tests passed. The PR description matched the implementation. Everything looked fine. Until production deployment revealed that the AI had:

- Generated thread-unsafe code for concurrent scenarios the prompt didn’t mention

- Allocated memory in hot paths without consideration for garbage collection pressure

- Implemented O(n²) algorithms where O(n) solutions existed

- Created database queries that worked with test data but failed catastrophically at production scale

- Ignored error-handling edge cases that weren’t in the prompt

The AI didn’t fail—it did exactly what was asked. The developer failed by not understanding that code generated from a prompt is a starting point, not a solution. The feedback loop—write code, measure behavior, understand consequences, refine thinking—got short-circuited.
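The first item on that list is easy to reproduce. This illustrative sketch (not code from those pull requests) uses a hypothetical `CounterDemo` class: `CountUnsafe` passes any single-threaded test yet loses updates under parallel execution, while `CountSafe` uses `Interlocked.Increment` to make the read-modify-write atomic.

```csharp
using System.Threading;
using System.Threading.Tasks;

public static class CounterDemo
{
    // count++ is read, add, write: parallel iterations can overwrite
    // each other's increments, so the result is often below iterations.
    public static int CountUnsafe(int iterations)
    {
        int count = 0;
        Parallel.For(0, iterations, _ => count++);
        return count;
    }

    // Interlocked.Increment performs the read-modify-write atomically,
    // so the result always equals iterations.
    public static int CountSafe(int iterations)
    {
        int count = 0;
        Parallel.For(0, iterations, _ => Interlocked.Increment(ref count));
        return count;
    }
}
```

The unsafe total varies from run to run and machine to machine, which is exactly why a happy-path test rarely catches it.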

“Vibe coding” is the term emerging for this pattern: describe the vibe of what you want, let AI generate the implementation, and ship it if it passes basic tests. It treats code as expression divorced from execution reality. It assumes that if code compiles and handles the happy path, it’s correct.

This assumption works until it doesn’t.

And when it doesn’t? You’re stuck. No foundation for debugging. Can’t reason about performance. Can’t identify where implementation diverges from requirements. Can’t refactor intelligently.

Why? Because you don’t understand what the code actually does.

Here’s your professional advantage. You recognize what the compiler can’t tell you:

- That the generated code works for the described case but fails for the dozen edge cases you didn’t think to mention

- That the algorithm performs acceptably with 100 records but collapses with 100,000

- That the abstraction looks clean but creates maintenance nightmares
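The gap between 100 and 100,000 records is easy to quantify for a quadratic algorithm. Comparing every pair costs n(n-1)/2 comparisons in the worst case: 4,950 at n = 100, but 4,999,950,000 at n = 100,000. This hypothetical `DuplicateCheck` class contrasts a pairwise duplicate check with a single pass over a `HashSet<int>`.

```csharp
using System.Collections.Generic;

public static class DuplicateCheck
{
    // O(n²): compares every pair. Harmless at 100 items,
    // roughly five billion comparisons at 100,000 items.
    public static bool HasDuplicatesQuadratic(IReadOnlyList<int> items)
    {
        for (int i = 0; i < items.Count; i++)
            for (int j = i + 1; j < items.Count; j++)
                if (items[i] == items[j])
                    return true;
        return false;
    }

    // Expected O(n): HashSet<T>.Add returns false when the value is already present.
    public static bool HasDuplicatesLinear(IEnumerable<int> items)
    {
        var seen = new HashSet<int>();
        foreach (var item in items)
            if (!seen.Add(item))
                return true;
        return false;
    }
}
```

Both versions return identical results on a small test fixture, which is why a test suite built on tiny inputs cannot tell them apart.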

AI generates syntax. Professionals understand semantics, performance characteristics, failure modes, and operational implications. The gap between these is where “real professionals will never be replaced.”

Code Materializes Consequences

Code isn’t just structured thought—it’s thought with operational consequences. When you design an architecture, you’re reasoning about responsibilities and boundaries. When you implement it, you’re creating CPU consumption patterns, memory allocation profiles, I/O bottlenecks, and long-term maintenance burdens.

These aren’t secondary concerns. They’re the actual impact of your decisions.

Take a straightforward example: caching. In discussion, caching sounds simple—“we’ll cache frequently accessed data.” The thinking feels complete. Then you implement it:

```csharp
// Looks reasonable in isolation
private readonly Dictionary<int, Customer> _cache = new();

public Customer? GetCustomer(int id)
{
    if (_cache.TryGetValue(id, out var customer))
        return customer;

    customer = _repository.Load(id);
    if (customer != null)
        _cache[id] = customer;

    return customer;
}
```

This code compiles. It runs. It even passes basic functional tests.

Then production hits.

What abstract thinking missed:

- Memory: Cache grows unbounded. No eviction policy. Memory consumption increases until the process crashes or triggers garbage collection storms that degrade response times by 300%.

- Concurrency: No synchronization. Multiple threads corrupt dictionary state, causing crashes or silent data corruption that costs hours of incident response time.

- Consistency: Cache never invalidates. Stale data persists indefinitely, creating subtle bugs that customer support escalates—costing reputation and revenue.

- Observability: No metrics. You can’t tell if caching helps or hurts performance without instrumenting separately.

Each of these issues represents thinking that felt complete in abstract terms but was fundamentally incomplete in executable reality. The “simple” caching decision materialized as:

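```csharp
// Assumed to exist elsewhere: _repository, Metrics, EvictOldestEntry.
private readonly ConcurrentDictionary<int, CacheEntry<Customer>> _cache = new();
private readonly TimeSpan _expirationWindow = TimeSpan.FromMinutes(5);
private readonly int _maxCacheSize = 10000;

public Customer? GetCustomer(int id)
{
    if (_cache.TryGetValue(id, out var entry) && !entry.IsExpired(_expirationWindow))
    {
        Metrics.CacheHitCounter.Increment();
        return entry.Value;
    }

    Metrics.CacheMissCounter.Increment();
    var customer = _repository.Load(id);

    if (customer != null)
    {
        // The Count check and the insert are not atomic, so the size limit is
        // approximate under concurrent misses. ConcurrentDictionary.Count also
        // takes every internal lock, so it is not free on a hot path.
        if (_cache.Count >= _maxCacheSize)
            EvictOldestEntry();

        _cache[id] = new CacheEntry<Customer>(customer, DateTimeOffset.UtcNow);
    }

    return customer;
}

// One possible definition of CacheEntry, inferred from how it is used above.
private sealed record CacheEntry<T>(T Value, DateTimeOffset CreatedAt)
{
    public bool IsExpired(TimeSpan window) => DateTimeOffset.UtcNow - CreatedAt > window;
}
```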

Code didn’t complicate a simple idea—it revealed that the idea was never actually simple. Abstract thinking deferred decisions about memory, concurrency, staleness, observability, and eviction. Code forced those decisions into concrete form where consequences become visible and measurable.

This is not implementation detail obscuring elegant design. This is reality asserting itself.
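The version above still leaves `EvictOldestEntry` and the `Metrics` counters unspecified. The following is a minimal, self-contained sketch of one way to fill those gaps, written as a hypothetical generic `BoundedTtlCache<TKey, TValue>`. Assumptions worth stating loudly: eviction removes the entry loaded longest ago (insertion order, not least-recently-used), the size limit is soft under contention, and the clock is injectable purely so expiry can be tested. For real services, a library cache such as `MemoryCache` from `Microsoft.Extensions.Caching.Memory`, which supports expiration and size limits, is usually a better starting point than a hand-rolled one.

```csharp
#nullable enable
using System;
using System.Collections.Concurrent;
using System.Threading;

// Hypothetical sketch: a bounded, expiring cache with hit/miss counters.
public sealed class BoundedTtlCache<TKey, TValue> where TKey : notnull
{
    // Value plus the moment it was loaded; used for both expiry and eviction.
    private sealed record Entry(TValue Value, DateTimeOffset CreatedAt);

    private readonly ConcurrentDictionary<TKey, Entry> _entries = new();
    private readonly TimeSpan _ttl;
    private readonly int _maxSize;
    private readonly Func<DateTimeOffset> _clock; // injectable so expiry can be tested
    private long _hits;
    private long _misses;

    public BoundedTtlCache(TimeSpan ttl, int maxSize, Func<DateTimeOffset>? clock = null)
    {
        _ttl = ttl;
        _maxSize = maxSize;
        _clock = clock ?? (() => DateTimeOffset.UtcNow);
    }

    public long Hits => Interlocked.Read(ref _hits);
    public long Misses => Interlocked.Read(ref _misses);
    public int Count => _entries.Count;

    public TValue? GetOrLoad(TKey key, Func<TKey, TValue?> load)
    {
        if (_entries.TryGetValue(key, out var entry) && _clock() - entry.CreatedAt < _ttl)
        {
            Interlocked.Increment(ref _hits);
            return entry.Value;
        }

        Interlocked.Increment(ref _misses);
        var value = load(key);
        if (value is null)
            return value; // do not cache values the source could not find

        // Check-then-insert is not atomic: concurrent misses can briefly push
        // the cache past _maxSize, so treat the limit as soft.
        if (!_entries.ContainsKey(key) && _entries.Count >= _maxSize)
            EvictOldestEntry();

        _entries[key] = new Entry(value, _clock());
        return value;
    }

    // Explicit invalidation for when the underlying data changes.
    public void Invalidate(TKey key) => _entries.TryRemove(key, out _);

    // Evicts the entry loaded longest ago. The O(n) scan is acceptable
    // for a sketch, not for a large cache on a hot path.
    private void EvictOldestEntry()
    {
        var found = false;
        TKey oldestKey = default!;
        var oldestTime = DateTimeOffset.MaxValue;

        foreach (var pair in _entries)
        {
            if (pair.Value.CreatedAt < oldestTime)
            {
                oldestTime = pair.Value.CreatedAt;
                oldestKey = pair.Key;
                found = true;
            }
        }

        if (found)
            _entries.TryRemove(oldestKey, out _);
    }
}
```

Each line of this sketch answers one of the questions the original one-line caching decision left open: how big, how stale, who invalidates, and how you know whether it helps.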

What Comes Next: The Feedback Loop AI Cannot Replicate

AI-generated code without understanding creates productivity illusions that collapse in production. Code forces abstract thinking into executable form, exposing gaps that pure reasoning glosses over. That much is clear.

But understanding the problem doesn’t answer the deeper question: What exactly is this feedback loop between code and reality, and why can’t AI replicate it? What mechanisms transform vague reasoning into concrete understanding?

The answer lies in the tools we use every day: compilers, profilers, tests, production environments. These aren’t just validation gates. They’re reality engines that do something AI fundamentally cannot: they execute your assumptions against actual constraints and report back with unfiltered truth.

In the next part of this series, we’ll explore how these mechanisms form a cognitive feedback loop that sharpens professional thinking in ways no AI prompt can simulate.
